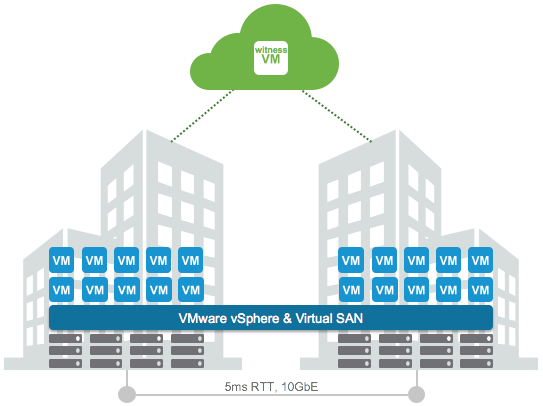

Logical Diagram of VMware vSAN Stretched Cluster

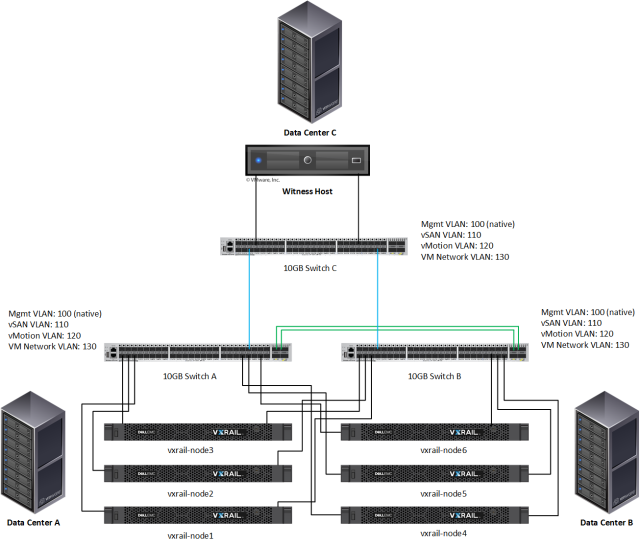

Physical Diagram of VMware vSAN Stretched Cluster

Last week I deployed a test environment of a VMware vSAN Stretched Cluster running on Dell EMC VxRail Appliances. In this post we describe how to set up a vSAN Stretched Cluster on VxRail. The figure above is a high-level physical diagram of the system. Across Sites A and B there are six VxRail Appliances and two 10GbE network switches (one per site), interconnected by two 10GbE links, and each VxRail Appliance has one 10GbE uplink to each network switch. Site C has one vSAN Witness host and one 10GbE network switch. The configuration of each piece of hardware and software in this environment is listed below.

Site A (Preferred Site)

3 x VxRail E460 Appliances

Each node includes 1 x SSD, 3 x SAS HDD and 2 x 10GbE SFP+ ports

1 x 10GbE network switch

Site B (Secondary Site)

3 x VxRail E460 Appliances

Each node includes 1 x SSD, 3 x SAS HDD and 2 x 10GbE SFP+ ports

1 x 10GbE network switch

Site C (Remote Site)

1 x vSAN Witness host, 2 x 10GbE SFP+ ports

1 x 10GbE network switch

Top-of-Rack Switch A/B/C

Mgmt 192.168.10.x/24, VLAN 100 (Native)

vSAN 192.168.11.x/24, VLAN 110

vMotion 192.168.12.x/24, VLAN 120

VM Network 192.168.13.x/24, VLAN 130
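The VLAN and subnet plan above can also be written down as data and sanity-checked before it is configured on the switches. The following is a minimal Python sketch using only the standard library; `TrafficNetwork` and `validate_plan` are hypothetical names, and the rule that exactly one network is the native (untagged) VLAN is an assumption of this sketch that happens to match the plan above.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficNetwork:
    name: str
    subnet: ipaddress.IPv4Network
    vlan_id: int
    native: bool = False


# Test-environment plan from the Top-of-Rack switch list above.
PLAN = [
    TrafficNetwork("Mgmt", ipaddress.ip_network("192.168.10.0/24"), 100, native=True),
    TrafficNetwork("vSAN", ipaddress.ip_network("192.168.11.0/24"), 110),
    TrafficNetwork("vMotion", ipaddress.ip_network("192.168.12.0/24"), 120),
    TrafficNetwork("VM Network", ipaddress.ip_network("192.168.13.0/24"), 130),
]


def validate_plan(plan):
    """Return a list of problems found in the plan (an empty list means OK)."""
    problems = []
    vlan_ids = [n.vlan_id for n in plan]
    if len(set(vlan_ids)) != len(vlan_ids):
        problems.append("duplicate VLAN IDs")
    for n in plan:
        if not 1 <= n.vlan_id <= 4094:
            problems.append(f"{n.name}: VLAN {n.vlan_id} out of range")
    # Every pair of subnets must be disjoint.
    for i, a in enumerate(plan):
        for b in plan[i + 1:]:
            if a.subnet.overlaps(b.subnet):
                problems.append(f"{a.name} overlaps {b.name}")
    # Assumption of this sketch: exactly one network is untagged (native).
    if sum(n.native for n in plan) != 1:
        problems.append("exactly one network should be the native VLAN")
    return problems


print(validate_plan(PLAN))  # → []
```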

VMware and EMC Software

VxRail Manager 4.5.070

VMware vCenter 6.5 U1 Appliance

VMware ESXi 6.5 U1

VMware vSAN 6.6 Enterprise

VMware vSAN Witness Host 6.5

NOTE: The network design above is for a test environment only. For production network planning, see the “VxRail Planning Guide for Virtual SAN Stretched Cluster”.

https://www.emc.com/collateral/white-papers/h15275-vxrail-planning-guide-virtual-san-stretched-cluster.pdf

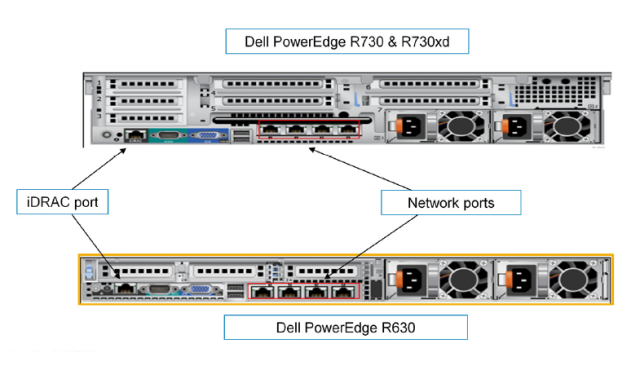

NOTE: As a best practice, connect the iDRAC port of each VxRail node to an additional 1GbE network switch.

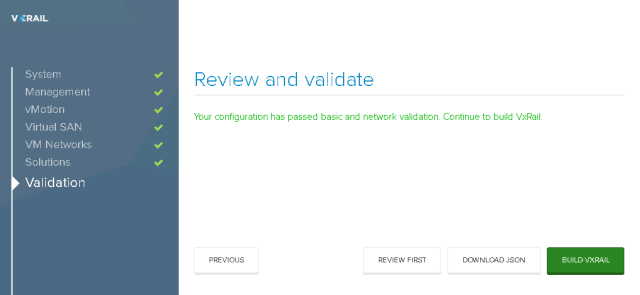

When all of the physical network connections and requirements are ready at each site, we can start to build the VxRail cluster. Before the VxRail installation, note that the required IP addresses and VLANs (ESXi Management, vMotion, vSAN and VM Network) must be predefined on each 10GbE network switch. For details of the VxRail network design, see the “Dell EMC VxRail Network Guide”.

https://www.emc.com/collateral/guide/h15300-vxrail-network-guide.pdf

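Part of this planning step is giving each node an address in every VMkernel subnet. The sketch below assumes a hypothetical convention that is not taken from the VxRail guides: the six nodes get consecutive host addresses starting at .11, with the same last octet in the Mgmt, vSAN and vMotion subnets. The node names are also hypothetical; the real values depend on your own IP plan.

```python
import ipaddress

# Subnets from the test-environment plan. VM Network carries guest traffic,
# so no VMkernel address is allocated from it here.
VMKERNEL_SUBNETS = {
    "Mgmt": ipaddress.ip_network("192.168.10.0/24"),
    "vSAN": ipaddress.ip_network("192.168.11.0/24"),
    "vMotion": ipaddress.ip_network("192.168.12.0/24"),
}

# Hypothetical node names: three nodes per data site (3+3).
NODES = [f"site-a-node{i}" for i in range(1, 4)] + [f"site-b-node{i}" for i in range(1, 4)]

# Hypothetical convention: node k (0-based) uses host offset FIRST_OFFSET + k
# in every subnet, so the last octet is identical across traffic types.
FIRST_OFFSET = 11


def allocate(nodes, subnets, first_offset=FIRST_OFFSET):
    """Map each node to one usable host address per VMkernel subnet."""
    plan = {}
    for k, node in enumerate(nodes):
        plan[node] = {}
        for traffic, subnet in subnets.items():
            addr = subnet.network_address + first_offset + k
            # Reject addresses outside the subnet or equal to network/broadcast.
            if addr not in subnet or addr in (subnet.network_address, subnet.broadcast_address):
                raise ValueError(f"{addr} is not a usable host address in {subnet}")
            plan[node][traffic] = addr
    return plan


for node, addrs in allocate(NODES, VMKERNEL_SUBNETS).items():
    print(node, {t: str(a) for t, a in addrs.items()})
```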
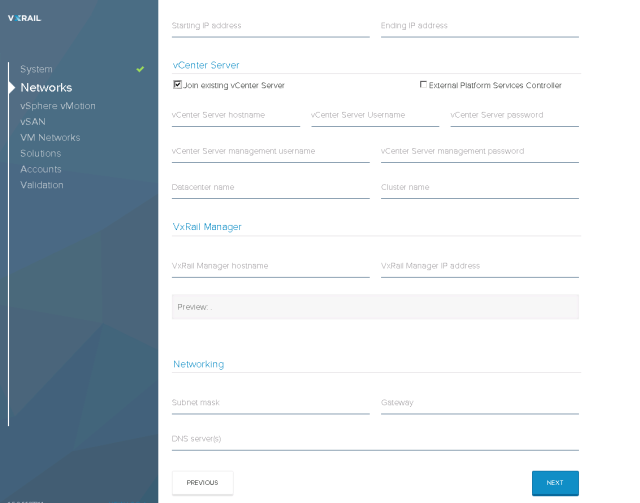

Browse to the VxRail Manager IP address and start the initial configuration.

For a vSAN stretched cluster deployment on VxRail, an external vCenter Server is required.

Once all of the requirement validations pass, we can build the VxRail cluster.

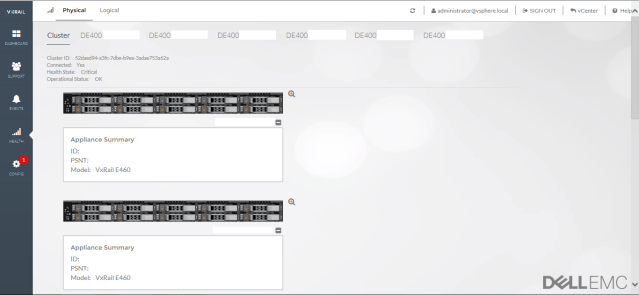

After the VxRail cluster has been built successfully, we can access VxRail Manager.

After the VxRail cluster is built, we log in to vCenter with the vSphere Web Client. We can see that each node has separate network port groups on the vDS. The following table shows how VxRail traffic is separated on the E Series 10GbE NICs:

Next, we prepare the vSAN Witness host at Site C. The witness host is delivered as an OVA file, which can be downloaded from the VMware website.

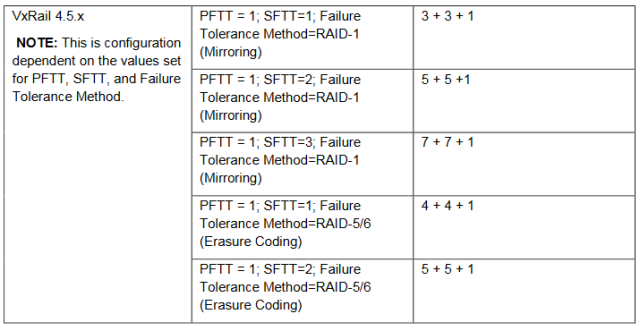

The following is the VxRail Release Compatibility Table. In this test environment, we set up a 3+3+1 vSAN stretched cluster: three nodes at the preferred site, three at the secondary site and one witness host.

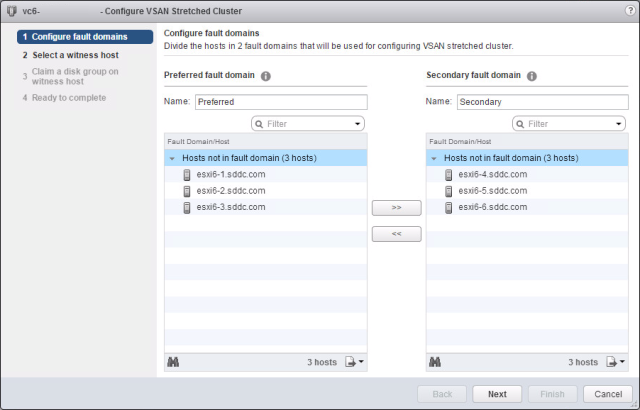

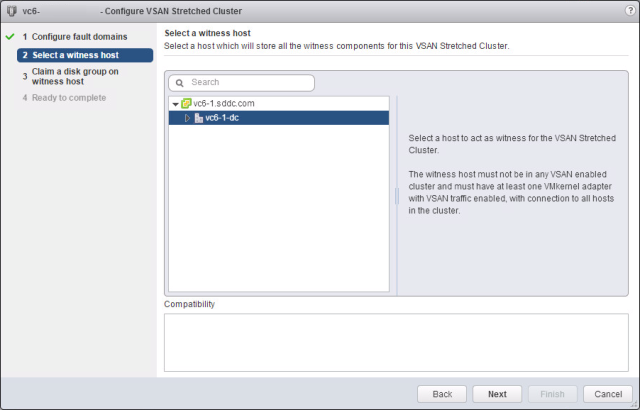

When all nodes and the witness host are ready, we can start configuring the vSAN Stretched Cluster. First, we configure the two fault domains, the preferred site and the secondary site; each fault domain includes three VxRail nodes. Then we select the host at Site C as the witness host. Finally, we claim the cache and capacity disks for the witness host, and the vSAN Stretched Cluster is built automatically.
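The fault-domain layout, and why the witness has to live at a third site, can be illustrated with a small model. This is a simplified sketch, not vSAN's actual vote algorithm: it assumes each PFTT=1 object has one vote at the preferred site, one at the secondary site and one on the witness, and stays accessible while a strict majority of votes is reachable. `StretchedCluster`, `object_accessible` and the host names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StretchedCluster:
    preferred: list[str]   # hosts in the preferred-site fault domain
    secondary: list[str]   # hosts in the secondary-site fault domain
    witness: str           # witness host at the third site

    def validate(self):
        """Basic layout checks for a stretched cluster (simplified)."""
        if not self.preferred or not self.secondary:
            raise ValueError("both data fault domains need at least one host")
        hosts = self.preferred + self.secondary
        if len(set(hosts)) != len(hosts):
            raise ValueError("a host cannot belong to both fault domains")
        if self.witness in hosts:
            raise ValueError("the witness must not be a member of a data fault domain")


def object_accessible(sites_up: set[str]) -> bool:
    """Simplified quorum check: one vote per site, strict majority wins.

    Real vSAN assigns votes per component; this only shows why a
    third (witness) site lets an object survive the loss of one data site.
    """
    votes = {"preferred": 1, "secondary": 1, "witness": 1}
    available = sum(v for site, v in votes.items() if site in sites_up)
    return available * 2 > sum(votes.values())


cluster = StretchedCluster(
    preferred=[f"site-a-node{i}" for i in range(1, 4)],
    secondary=[f"site-b-node{i}" for i in range(1, 4)],
    witness="site-c-witness",
)
cluster.validate()  # raises ValueError if the layout is wrong
print(object_accessible({"secondary", "witness"}))  # preferred site down → True
print(object_accessible({"witness"}))               # both data sites down → False
```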

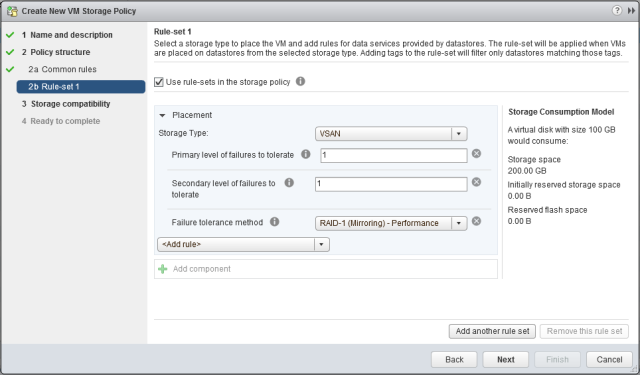

When the vSAN Stretched Cluster is ready on the VxRail cluster, we can see six hosts running in one ESXi cluster, with the witness host running in another data center. The next step is to create vSAN storage policies to protect the virtual machines. Because this vSAN Stretched Cluster is 3+3+1, it supports only RAID-1 (mirroring) for local protection. RAID-5/6 (Erasure Coding) requires more VxRail nodes at the preferred and secondary sites; see the VxRail Release Compatibility Table above for details.
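The host-count rule behind the compatibility table can be expressed as a small function. It encodes the standard vSAN minimums per data site: RAID-1 needs 2 x SFTT + 1 hosts, RAID-5 (SFTT=1) needs 4 and RAID-6 (SFTT=2) needs 6. It also encodes that vSAN offers RAID-5/6 erasure coding only on all-flash disk groups, which matters here because the E460 nodes in this test bed are hybrid (1 SSD + 3 SAS HDD). The function names are hypothetical; treat this as a sketch and check the VxRail Release Compatibility Table for what VxRail actually supports.

```python
def hosts_required_per_site(sftt: int, method: str) -> int:
    """Minimum hosts per data site for a given SFTT and failure tolerance method."""
    if method == "RAID-1":
        if not 0 <= sftt <= 3:
            raise ValueError("RAID-1 supports SFTT 0-3")
        return 2 * sftt + 1  # sftt + 1 replicas plus sftt witness components
    if method == "RAID-5/6":
        if sftt == 1:
            return 4  # RAID-5: 3 data + 1 parity
        if sftt == 2:
            return 6  # RAID-6: 4 data + 2 parity
        raise ValueError("erasure coding supports SFTT 1 (RAID-5) or 2 (RAID-6)")
    raise ValueError(f"unknown method {method!r}")


def supported(hosts_per_site: int, sftt: int, method: str, all_flash: bool) -> bool:
    """True if a site of this size and disk type can satisfy the policy."""
    # vSAN only offers RAID-5/6 erasure coding on all-flash disk groups.
    if method == "RAID-5/6" and not all_flash:
        return False
    return hosts_per_site >= hosts_required_per_site(sftt, method)


# This test bed: 3 hybrid nodes per site.
print(supported(3, 1, "RAID-1", all_flash=False))    # → True
print(supported(3, 1, "RAID-5/6", all_flash=True))   # → False (needs 4 hosts per site)
print(supported(4, 1, "RAID-5/6", all_flash=False))  # → False (hybrid disk groups)
```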

The VM Storage Policy for R1 (PFTT=1, SFTT=1, Failure tolerance method=RAID-1)

Primary level of failures to tolerate = 1 (data is replicated across the two site fault domains)

Secondary level of failures to tolerate = 1 (specifies local protection within each site)

Failure tolerance method = RAID-1 (Mirroring) (specifies RAID-1 local protection at both sites)

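To see what the R1 policy costs in raw capacity, multiply the copies across sites by the local mirrors. A minimal sketch, assuming the virtual disk is fully written (vSAN objects are thin-provisioned by default) and ignoring witness and metadata overhead; `raw_capacity_raid1` is a hypothetical helper.

```python
def raw_capacity_raid1(vmdk_gb: float, pftt: int = 1, sftt: int = 1) -> float:
    """Raw vSAN capacity consumed by a RAID-1 object in a stretched cluster.

    PFTT=1 keeps a full copy at each data site (pftt + 1 site copies),
    and SFTT mirrors each site copy locally (sftt + 1 replicas per site).
    Witness components are metadata-only and are ignored here.
    """
    site_copies = pftt + 1
    local_replicas = sftt + 1
    return vmdk_gb * site_copies * local_replicas


print(raw_capacity_raid1(100))  # → 400
```

With PFTT=1 and SFTT=1, every gigabyte written consumes four gigabytes of raw vSAN capacity across the two data sites.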
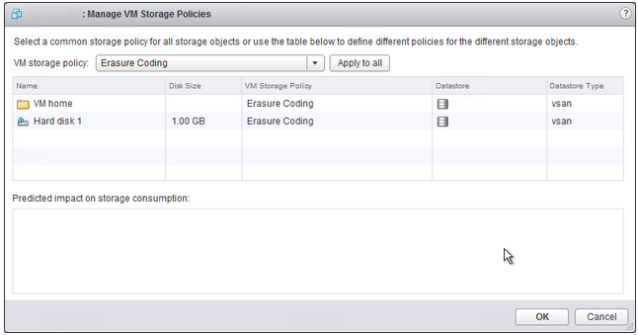

The VM Storage Policy for R5/6 (PFTT=1, SFTT=2, Failure tolerance method=RAID-5/6)

Primary level of failures to tolerate = 1 (data is replicated across the two site fault domains)

Secondary level of failures to tolerate = 2 (specifies local protection within each site)

Failure tolerance method = RAID-5/6 (Erasure Coding) (with SFTT=2, this is RAID-6 at each site)

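With erasure coding, each site stores one RAID-5 (3 data + 1 parity) or RAID-6 (4 data + 2 parity) stripe instead of full mirrors, and each component must sit on a different host, which is where the 4- and 6-host-per-site minimums come from. A minimal sketch under the same assumptions as a RAID-1 capacity estimate (fully written disk, witness and metadata overhead ignored); `erasure_coding_summary` is a hypothetical helper.

```python
# vSAN erasure-coding stripe geometry per site: (data, parity) components.
EC_LAYOUT = {
    1: (3, 1),  # SFTT=1 -> RAID-5
    2: (4, 2),  # SFTT=2 -> RAID-6
}


def erasure_coding_summary(vmdk_gb: float, sftt: int, pftt: int = 1) -> dict:
    """Component count and raw capacity for an erasure-coded stretched-cluster object.

    Each data site holds one full erasure-coded copy (PFTT=1 -> 2 sites).
    Assumes the disk is fully written and ignores witness/metadata overhead.
    """
    if sftt not in EC_LAYOUT:
        raise ValueError("erasure coding supports SFTT 1 (RAID-5) or 2 (RAID-6)")
    data, parity = EC_LAYOUT[sftt]
    raw_per_site = vmdk_gb * (data + parity) / data
    return {
        "components_per_site": data + parity,  # each placed on a different host
        "raw_gb_per_site": raw_per_site,
        "raw_gb_total": raw_per_site * (pftt + 1),
    }


print(erasure_coding_summary(100, sftt=2))
```

For a 100 GB disk under the R5/6 policy (SFTT=2, so RAID-6), this reports six components per site and 300 GB of raw capacity across both sites, versus 400 GB for the same disk with PFTT=1, SFTT=1 RAID-1 mirroring.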
Once the VM storage policies are created, you can apply them to the virtual machines.
